Portfolio - Elliott Hall

This portfolio contains excerpts of my creative work in various immersive spaces: XR, theatre, and Virtual Production. The main case study, The Digital Ghost Hunt, shows every stage of my process; the shorter project descriptions that follow illustrate how that process applies in other contexts and formats.

If you're interested in a more in-depth overview of my various projects, as well as the novels I have written, you can find more information on my website.

Case Study: The Digital Ghost Hunt

A ground-breaking fusion of Augmented Reality, coding education, and immersive theatre

Concept, co-creator, technical lead

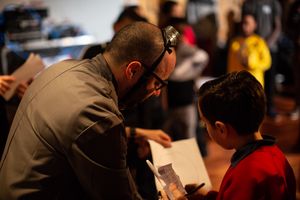

The Digital Ghost Hunt begins when a normal day is interrupted by Deputy Undersecretary Quill from the Ministry of Real Paranormal Hygiene (MORPH), who recruits any young children nearby to help her investigate a mysterious haunting in their building. Under the tutelage of Quill and Chief Scientist Professor Bray, the young ghost hunters must use their SEEK ghost detectors to unravel the mystery of the haunting and help set the ghost free. Meanwhile, the ghost communicates through a mixture of traditional theatrical effects and the poltergeist potential of smart home technology.

More information about the show's world and characters can be found on the project website.

The Digital Ghost Hunt was a research collaboration between children's immersive theatre company KIT Theatre, King's Digital Lab at King's College London, and the University of Sussex. Its four productions were initially funded by the AHRC through its new immersive experiences pilot program, and then received follow-on funding on the strength of the project's impact and performance. The last show was commissioned through the Manchester Royal Exchange's Ambassadors program.

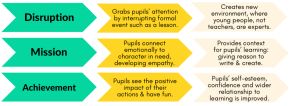

I co-created the experience with Tom Bowtell of KIT Theatre. KIT Theatre’s primary mission is to use immersive experiences in schools to have a transformative impact on disadvantaged young people’s creativity, confidence and critical thinking.

Research Objectives

- Investigate how immersive technology could be combined with immersive theatre to reconnect people with physical spaces through play, using a building’s history, architecture, and memory.

- Study the 'spontaneous communities' that developed amongst immersive audiences and their dynamics.

- Reframe technology as both imaginative and accessible, especially to groups that are likely to see it as unapproachable.

To these research goals I added some personal objectives: exploring the practical use of immersive technology and Internet of Things (IoT) devices in immersive theatre, from design and budget to sustainability; and scaring children (in a fun way).

Background

I started exploring what would become the Ghost Hunt because I felt that the tinkering, DIY spirit of technology was under threat from the smartphones and other screens that we use every day but cannot modify, remix or disassemble. This lack of understanding of the technology behind the screens matters not just for the next generation of developers, engineers and game designers but, more profoundly, for all of us as citizens in an increasingly digital society. The Ghost Hunt seeks to restore making and programming to their proper place: not just something you might study for a career, or something only for ‘boys’ or ‘middle class kids,’ but a tool of the imagination. This aspiration to make the technology as accessible as possible informed every step of the design process, from the narrative flow to the technologies used in the experience.

Narrative design process

The Digital Ghost Hunt was designed with techniques from immersive theatre, game design, and software development, joined together through a shared process of iterative development. I came to it from a perspective more influenced by novels and video games, while Tom structured the show through his Theory of Change model (see the diagram above). Where we met was a shared desire to put children's agency and creativity in the foreground of every decision, making sure participants drove the narrative and flow of the experience, with facilitators and other adults fading into the background as much as possible.

It was this principle that guided our first and maybe most important storyworld decision: only children can see ghosts. This single fact meant that children were always operating with more information than adults, so the latter had to seek their perspective and advice.

The core Digital Ghost Hunt experience was an investigation in two acts, framed by a prologue and a finale:

Prologue: Arrival and hijacking of the space.

Act I: Who the ghost is and why he/she is haunting the venue (unfinished business)

Act II: Finishing that business and setting the ghost free

Finale: Ghost’s final appearance and thank you.

Audiences were split into small groups of 4-6 (to manage numbers, make the experience more intimate, and for safeguarding reasons), each working together towards a common goal. One half of the audience would stay in the HQ doing 'research': puzzle work with props and fake documents. The other half would go out into the field and look for evidence of the ghost's activities. At the halfway point the groups switched, so everyone took part in both activities.

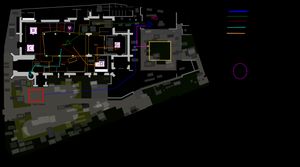

The fieldwork was where most of the technology came in. I created an Ultrawideband (UWB) radio grid that allowed the SEEK detectors carried by the children to see where the ghost had left traces in a room (in reality, coordinates and trails drawn in an AR grid). This helped them navigate the spaces, but didn't tell them what they were looking for; that was up to their own investigative skills. I also used simple smart home technologies (smart switches, lights, etc.) driven by Home Assistant running on Raspberry Pis, along with simple DIY devices like radio-driven solenoids, to create classic poltergeist effects: switching things on, blinking lights, and pushing things off shelves in an attempt to communicate with participants. These were combined with classic theatre techniques such as Pepper's Ghost, custom props, and hiding someone behind a wardrobe to pull on a string. Digital or analog, what mattered most was whatever worked for the experience.
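Exactly how cues reached Home Assistant isn't described here. As one hedged illustration, assuming the show controller calls Home Assistant's REST API (`POST /api/services/<domain>/<service>` with a long-lived access token), the request for a single poltergeist cue could be built as below. The `PoltergeistCue` struct, entity name, host and token are made up for the example, and actually sending the bytes is left to an HTTP client.

```cpp
#include <string>

// Hypothetical cue description: which Home Assistant service to call on which entity.
struct PoltergeistCue {
    std::string domain;    // e.g. "light" or "switch"
    std::string service;   // e.g. "toggle", "turn_on", "turn_off"
    std::string entityId;  // e.g. "light.hallway_lamp" (a made-up entity)
};

// Builds a raw HTTP/1.1 request for Home Assistant's service endpoint,
// POST /api/services/<domain>/<service>, authenticated with a bearer token.
// Opening the connection and sending the request is left to an HTTP client.
std::string buildServiceCall(const PoltergeistCue& cue, const std::string& host,
                             const std::string& token) {
    const std::string body = "{\"entity_id\": \"" + cue.entityId + "\"}";
    std::string request =
        "POST /api/services/" + cue.domain + "/" + cue.service + " HTTP/1.1\r\n";
    request += "Host: " + host + "\r\n";
    request += "Authorization: Bearer " + token + "\r\n";
    request += "Content-Type: application/json\r\n";
    request += "Content-Length: " + std::to_string(body.size()) + "\r\n";
    request += "Connection: close\r\n\r\n";
    request += body;
    return request;
}
```

Keeping each effect as data (domain, service, entity) means a new poltergeist trick is a new cue entry rather than new code.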

This initial format was adapted over the course of four different productions depending on budget, the size of the building, and audience feedback (see below). The first show, at Battersea Arts Centre, started at a primary school, while the others were in a working theatre, a museum, and a historical regeneration site (an old spinning mill turned cultural centre). The overall lesson was that the core Ghost Hunt experience was flexible enough to be adapted to a range of formats and venues, and that the technology I had designed could be set up in an hour with almost no infrastructure prerequisites.

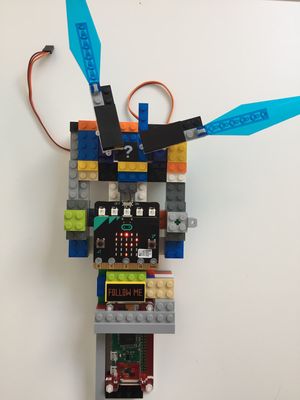

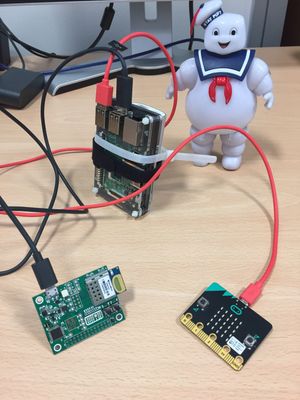

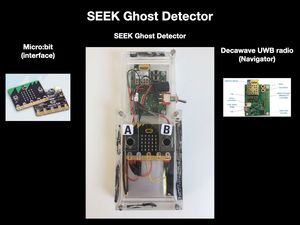

The SEEK Ghost Detector

I designed the Spirit Evidence Existence Kit (SEEK) to be self-consciously analog, DIY, and quite chunky in both its technology and aesthetics; I wanted each piece to be accessible in terms of cost and understanding. For that reason I designed the laser-cut case in Tinkercad, an in-browser 3D design tool aimed at schools, and used the Micro:bit as the device's primary controller. The Micro:bit had been given to every primary school in the UK, so it would be familiar to teachers, and it was designed to be played with and reprogrammed. My only deviation was the use of Decawave's Ultrawideband (UWB) development board for indoor location sensing: experiments using Bluetooth, radio, and infrared lasers weren't reliable or flexible enough.

I decided at an early stage not to have a screen: I wanted to foreground the physical space, rather than have it fighting for attention with a device. I also wanted to create a clear separation between the experience and the games and apps participants used every day on their smartphones.

I originally designed the single device to have four different functions:

- GMeter: Finding where the ghost had been by displaying a simple 1-10 number as the device got closer to AR hotspots.

- Ectoscope: A trail finder that worked like a dowsing rod, allowing the participant to follow in the ghost's footsteps.

- Spirit sign: A decoding function that allowed you to 'draw' symbols left by the ghost by tilting the device.
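As a minimal sketch of the GMeter idea, assuming the reading rises linearly from 1 at the edge of a hotspot's detection radius to 10 at its centre, the distance-to-reading mapping could look like this. The 3 m radius, the linear curve and the name `gMeterReading` are illustrative assumptions, not the SEEK's actual tuning. It returns -1 when nothing is in range, which fits the `reading > -1` check in the TypeScript code later in this section.

```cpp
#include <cmath>

// Hypothetical tuning: a hotspot can be "felt" within this radius (millimetres).
constexpr double kDetectRadiusMm = 3000.0;

// Maps the distance to the nearest hotspot onto a 1-10 reading:
// 10 when standing on it, falling linearly to 1 at the edge of the radius,
// and -1 (nothing detected) beyond it or for an invalid distance.
int gMeterReading(double distanceMm) {
    if (distanceMm < 0.0 || distanceMm > kDetectRadiusMm) {
        return -1;
    }
    // 1.0 at the hotspot, 0.0 at the edge of the radius
    const double closeness = 1.0 - distanceMm / kDetectRadiusMm;
    return 1 + static_cast<int>(std::lround(closeness * 9.0));
}
```

A coarse 1-10 scale suits a 5x5 LED display and leaves the interpretation to the children, which matches the design goal of not telling them exactly what to look for.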

I had guessed that this would stop the investigation from becoming repetitive, and prevent a single super-enthusiastic member of a group from doing everything. This seemed a reasonable assumption, but the audience proved it wrong (see below).

The first show used a chunkier prototype combining a Micro:bit and a Raspberry Pi in a 3D-printed shell. It turned out to be unnecessarily complicated, and uncomfortable for the ten-year-old audience to wear for the duration of the show.

I redesigned the device into the smaller, lighter version pictured above. The SEEK's function changed as well as its form factor, as I had the chance to observe audiences engaging with it in different venues and formats.

SEEK C++ / TypeScript library

I wrote the software library that the students used to program their Micro:bits in TypeScript and C++. The earlier V1 detector, which used a Raspberry Pi, was written in Python 3.

This was an interesting challenge, as the original Micro:bit had only 16KB of RAM (a few dozen tweets' worth of text!) and a small amount of storage. I couldn't have a lot of assets or files, so instead I wrote most of the data directly into the code itself.
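One way to write data "inside the code itself" on a device this small is to bake lookup tables into the program as `const` arrays, which an embedded toolchain can typically place in flash rather than copying into RAM. The sketch below assumes hotspots are stored as millimetre coordinates per room; the `Hotspot` struct, the coordinates and the `nearestHotspotMm` helper are hypothetical, not taken from the SEEK library.

```cpp
#include <cmath>
#include <cstddef>
#include <cstdint>

// A hotspot baked into the program: position in millimetres on the UWB grid.
struct Hotspot {
    int32_t x;
    int32_t y;
    uint8_t room;  // which room (grid) the hotspot belongs to
};

// Made-up coordinates for illustration. Because the table is const with a constant
// initialiser, the toolchain can keep it in read-only flash instead of RAM.
static const Hotspot kHotspots[] = {
    {1200, 3400, 0},
    {4500, 800, 0},
    {300, 2500, 1},
};
static constexpr std::size_t kHotspotCount = sizeof(kHotspots) / sizeof(kHotspots[0]);

// Returns the distance in mm to the nearest hotspot in `room`, or -1 if the room has none.
int32_t nearestHotspotMm(int32_t x, int32_t y, uint8_t room) {
    int64_t bestSquared = -1;
    for (std::size_t i = 0; i < kHotspotCount; ++i) {
        if (kHotspots[i].room != room) continue;
        const int64_t dx = static_cast<int64_t>(kHotspots[i].x) - x;
        const int64_t dy = static_cast<int64_t>(kHotspots[i].y) - y;
        // Compare squared distances so the loop needs no square roots
        const int64_t d2 = dx * dx + dy * dy;
        if (bestSquared < 0 || d2 < bestSquared) bestSquared = d2;
    }
    if (bestSquared < 0) return -1;
    return static_cast<int32_t>(std::lround(std::sqrt(static_cast<double>(bestSquared))));
}
```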

Below is a sample: a function that queries the UWB board for the device's current location and any nearby anchors. Other functions used this to show proximity to hotspots and trails.

(The full main.cpp file is on the SEEK V2 Core Library GitHub.)

```cpp
/* Query the UWB for our current location, and nearby anchors.
   The //% annotation marks the function for export to MakeCode. */
//%
int currentLoc(){
    if (mode == 0){
        // Live mode: ask the DWM board for its location
        uint8_t RXBuffer[BUFFLEN];
        uBit.serial.send((uint8_t *)GET_LOC, 2);
        uBit.sleep(200);
        int waiting = -1;
        int bufferIndex = -1;
        // How much is waiting in the RX buffer?
        waiting = uBit.serial.rxBufferedSize();
        if (waiting > BUFFLEN){
            uBit.panic(10);
        }
        if (waiting > 0){
            uBit.serial.read((uint8_t *)RXBuffer, waiting, ASYNC);
            uBit.sleep(200);
            bufferIndex = 0;
            // Check the return byte and error code
            bufferIndex = parseDWMReturn(RXBuffer, bufferIndex);
            if (bufferIndex > 0){ // && waiting >= MIN_LOC_RETURN
                // Get the tag's location
                bufferIndex = parseLoc(pos, RXBuffer, bufferIndex);
                if (bufferIndex > 0){
                    // Then the anchors in range
                    bufferIndex = parseAnchors(RXBuffer, bufferIndex);
                } else {
                    uBit.display.scroll("ERROR DWMPARSELOC");
                }
            } else {
                uBit.display.scroll("ERROR DWMReturn");
            }
        } else {
            uBit.display.scroll("U12");
        }
        return bufferIndex;
    } else if (mode == 1){
        // Test mode: set fixed test data and return a successful read
        pos[0] = 0;
        pos[1] = 0;
        pos[2] = 0;
        currentNumAnchors = 1;
        anchors[0][0] = 1234;
        anchors[0][1] = 3334;
        return 44;
    }
    // Any other mode: report failure instead of falling off the end of a non-void function
    return -1;
}
```
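The `parseDWMReturn` and `parseLoc` helpers called above aren't shown in this excerpt. Assuming the DWM1001 firmware's TLV response format for a location request (an error-code TLV of type `0x40` followed by a position TLV of type `0x41` holding x, y and z as little-endian 32-bit millimetres plus a quality byte), equivalent parsing could be sketched as below. The function names and the -1 error convention are my own, chosen to mirror the `bufferIndex > 0` checks above; the type codes should be verified against Decawave's API guide before relying on them.

```cpp
#include <cstddef>
#include <cstdint>

// Assumed DWM1001 TLV type codes (check against the firmware API guide).
constexpr uint8_t kTlvErrCode = 0x40;
constexpr uint8_t kTlvPosXYZ = 0x41;

struct TagPosition {
    int32_t xMm, yMm, zMm;
    uint8_t quality;
};

// Reads a little-endian signed 32-bit value starting at buf[i].
static int32_t readInt32LE(const uint8_t* buf, std::size_t i) {
    const uint32_t v = static_cast<uint32_t>(buf[i]) |
                       (static_cast<uint32_t>(buf[i + 1]) << 8) |
                       (static_cast<uint32_t>(buf[i + 2]) << 16) |
                       (static_cast<uint32_t>(buf[i + 3]) << 24);
    return static_cast<int32_t>(v);
}

// Expects an error-code TLV (type 0x40, length 1, value 0 = OK) at `index`.
// Returns the index just past it, or -1 if it is missing, truncated or reports an error.
int parseErrTlv(const uint8_t* buf, std::size_t len, int index) {
    if (index < 0 || static_cast<std::size_t>(index) + 3 > len) return -1;
    if (buf[index] != kTlvErrCode || buf[index + 1] != 1 || buf[index + 2] != 0) return -1;
    return index + 3;
}

// Expects a position TLV (type 0x41, length 13) at `index` and fills `out`.
// Returns the index just past it, or -1 on a malformed frame.
int parsePosTlv(const uint8_t* buf, std::size_t len, int index, TagPosition& out) {
    if (index < 0 || static_cast<std::size_t>(index) + 15 > len) return -1;
    if (buf[index] != kTlvPosXYZ || buf[index + 1] != 13) return -1;
    const std::size_t v = static_cast<std::size_t>(index) + 2;  // first value byte
    out.xMm = readInt32LE(buf, v);
    out.yMm = readInt32LE(buf, v + 4);
    out.zMm = readInt32LE(buf, v + 8);
    out.quality = buf[v + 12];
    return index + 15;
}
```

Returning the next index lets the parsers be chained over one buffer, the same way `currentLoc` threads `bufferIndex` through each step.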

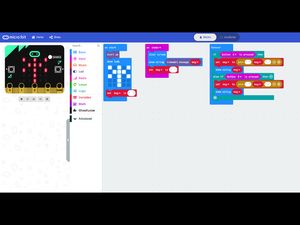

I then called these C++ functions from TypeScript, which was much friendlier to use on the Micro:bit. Here the GMeter SEEK (see above) gets its location, displays a little animation (the three showImage lines), and then shows a number indicating how close we are to the nearest spooky hotspot.

```typescript
if (device_id == SEEKType.GMETER) {
    images.iconImage(IconNames.Target).showImage(0);
    basic.forever(function () {
        if (input.buttonIsPressed(Button.A)) {
            // Refresh the UWB position, then compute the G-meter reading
            currentLoc();
            reading = gMeter(rooms);
            // Short "scanning" animation
            images.iconImage(IconNames.SmallDiamond).showImage(0);
            basic.pause(200);
            images.iconImage(IconNames.Target).showImage(0);
            basic.pause(200);
            images.iconImage(IconNames.Diamond).showImage(0);
            basic.pause(200);
            basic.clearScreen();
            // Only display non-negative readings
            if (reading > -1) {
                basic.showString("" + reading);
            }
        }
        basic.pause(200);
    });
}
```

The full main.ts file is available in the SEEK Application Library repository.

I could then expose these TypeScript functions as blocks in MakeCode, allowing even low-level functions written in C++ to be used easily in visual programming.

Audience analysis and lessons learned

- Observation: by trained facilitators, academic partners, and performers. My role in the production was deliberately vague and boring: 'Junior Technician.' The original idea was that I could unobtrusively fix anything that broke, but it also allowed me to observe how participants engaged with both the narrative and the devices I'd built, especially the SEEK detector.

- Surveys: These were light-touch, and were used by both academics and teachers in schools.

- Focus groups and post-show interviews: These recorded interviews allowed us to assess the impact of our work on the audience, and what had been memorable for good or ill.

- Workshops: I ran two in-world coding lessons, ostensibly to prepare students for fieldwork. These allowed me to observe their engagement with the Micro:bits and their attitude to technology in general.

The key insight from this audience feedback for my work as a creative technologist was that there were too many things to do, and it was holding back collaboration. In my eagerness to make sure there was enough for everyone to do, I had overcomplicated the fieldwork portions of the production. In pushing the interactions in the show as far as I could, I had fallen into a more solitary model of individual achievement, and lost the collaborative play that should have been at the heart of the experience. Observing the audience at play showed me the error of my ways.

This insight led me to drop the spirit sign function of the ghost detector and focus on a few simple challenges. This coincided with the third production, at the Garden Museum: designed as a museum late, it was much shorter than the production at York Theatre Royal (45 minutes compared to an hour and a half). This leanness allowed participants to work together rather than on separate bits of evidence, and created a feedback loop that increased the immersion for all of them.

Media, publications and resources

- Featured in Immersive Arcade's Best of British since 2001.

- Tom Bowtell and Elliott Hall wrote about their work on the Digital Ghost Hunt for Raspberry Pi's Hello World magazine (Issue #9).

- Listen to an episode of the Exchange's podcast Connecting Tales discussing the Spinner's Mill production, with Tom, Elliott, and Leigh Ambassador (and part-time ghost) Mike Burwin.

- I've spoken about The Digital Ghost Hunt at academic, game, and media events, including the conference paper ‘The Digital Ghost Hunt: A New Approach to Coding Education Through Immersive Theatre’, delivered at the Digital Humanities conference in Mexico City (2018); a talk with Tom Bowtell at the Continue Annual Conference @ York Mediale (2018); and as part of the UKRI US Immersive Mission to South by Southwest (SXSW) in 2019.

BridgeXR (work in progress)

Immersive play across boundaries of geography and accessibility

Concept, co-creator, technical lead

BridgeXR is a new model of XR experience that leverages real-time engines, Digital Twins, and the Internet of Things (IoT) to create an immersive storyworld across physical and virtual space, crossing boundaries of accessibility, health, and geography. Its core objective is to make immersive theatre more accessible through immersive technology. A desktop and VR app developed in Unreal Engine 5 lets people take part in in-person experiences they may not be able to attend due to health issues, or simply because they live far away. Using the app, they will be able to remotely open doors, turn on devices, and distract security, all while seeing what is happening in the room in real time via indoor location sensing. Their role isn't a consolation prize but a vital complement to the action in the room, and could just as easily be played by someone next door as by someone on the other side of the world.

It was inspired by my earlier work with KIT Theatre on The Digital Ghost Hunt, and a production they made in partnership with Great Ormond Street Children's Hospital and

The project completed a proof of concept in 2022, producing a live demo with six young players. The lessons learned from the demo are being applied to our first prototype production: A Robot Escapes. Inspired by A Robot Awakes, Escapes is an escape room turned inside out: players must break into the government lab, restore the robot, and then help her escape. Patients can play an escape room with their friends and family without ever leaving their hospital rooms.

A Robot Escapes is currently in the prototype stage, being developed with incubation support from King's Digital Lab.

Mirroring the virtual and real (in Unreal)

What enables the interactions between Agents in the field and Operators working virtually is the ability to translate real-world location data into virtual activity in Unreal Engine, and (in a more limited way) vice versa. This mirroring allows virtual participants to see physical people moving around in real time, with that movement expressed through virtual avatars.

The first part of this challenge is getting the location data provided by the UWB radios into Unreal. Data from the sensors is collected by a listening node on a Raspberry Pi in the room and published to an MQTT topic. The Unreal application subscribes to these topics (with the help of the MQTT plugin from Nineva Studios).
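The listener's message format isn't specified here. As a hypothetical example, a listening node might publish each tag's position to a per-tag topic as a small JSON object in metres, which the Unreal side then scales to centimetres. The `UwbReading` struct, the `uwb/<grid>/<tag>/position` topic layout and the field names are all assumptions, and grid names are assumed to be plain identifiers that need no JSON escaping.

```cpp
#include <cstddef>
#include <cstdio>
#include <string>
#include <vector>

// Hypothetical reading from the room's UWB listener, in metres.
struct UwbReading {
    std::string tagId;
    std::string gridName;
    double x, y, z;
};

// Assumed topic layout: uwb/<grid>/<tag>/position
std::string topicFor(const UwbReading& r) {
    return "uwb/" + r.gridName + "/" + r.tagId + "/position";
}

// Serialises the reading as a flat JSON object, to millimetre precision.
std::string payloadFor(const UwbReading& r) {
    const char* fmt = "{\"grid\":\"%s\",\"x\":%.3f,\"y\":%.3f,\"z\":%.3f}";
    // First call measures the output; second call writes it into a buffer of that size
    const int n = std::snprintf(nullptr, 0, fmt, r.gridName.c_str(), r.x, r.y, r.z);
    if (n < 0) return std::string();
    std::vector<char> buf(static_cast<std::size_t>(n) + 1);
    std::snprintf(buf.data(), buf.size(), fmt, r.gridName.c_str(), r.x, r.y, r.z);
    return std::string(buf.data(), static_cast<std::size_t>(n));
}
```

Putting the grid name in the payload lets a single subscriber route positions from several rooms to the right calibration.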

But this location data has to be translated into Unreal world space, and this is where the translation component comes in.

```cpp
#include "Uwb/UWBTranslationComponent.h"
#include "Uwb/UwbGridData.h"

// Sets default values for this component's properties
UUWBTranslationComponent::UUWBTranslationComponent()
{
    // Positions are translated on demand, so this component never needs to tick
    PrimaryComponentTick.bCanEverTick = false;
    // The UWB listener reports metres; Unreal units are centimetres
    MessageConversionScale = 100;
}

// Called when the game starts
void UUWBTranslationComponent::BeginPlay()
{
    Super::BeginPlay();
}

void UUWBTranslationComponent::AddUwbGrid(FUwbGridData NewGrid)
{
    if (!UwbGrids.Contains(NewGrid))
    {
        UwbGrids.Add(NewGrid);
    }
}

/**
 * Transform a UWB position received from the listener to the world position
 * in Unreal space.
 *
 * Scale the UWB position to Unreal units and the room's scale, use the reference
 * anchors to work out our orientation in world space (flipping axes as needed),
 * then apply the result as an offset from the grid's origin.
 *
 * @param UwbPosition received position in UWB space
 * @param GridData    set to the grid the position was translated against
 * @return FVector translated position in world space (zero if no grid matches)
 */
FVector UUWBTranslationComponent::UwbToWorldPosition(const FUwbPosition UwbPosition, FUwbGridData& GridData)
{
    FVector WorldPosition = FVector::ZeroVector;
    for (const FUwbGridData& Grid : UwbGrids)
    {
        if (UwbPosition.GridName == Grid.GridName)
        {
            GridData = Grid;
            const FVector UwbVector(UwbPosition.X, UwbPosition.Y, UwbPosition.Z);
            WorldPosition = TranslateUWBPosition(UwbVector, Grid, MessageConversionScale);
        }
    }
    return WorldPosition;
}

// Trace straight down from StartTrace to find the floor; Z stays at 0 if nothing is hit
FVector UUWBTranslationComponent::FindZFloor(UWorld* World, const FVector StartTrace, const FUwbGridData GridData,
                                             float TraceLength,
                                             TEnumAsByte<ECollisionChannel> CollisionChannel)
{
    FVector WithZ = StartTrace;
    WithZ.Z = 0.0f;
    if (!World)
    {
        return WithZ;
    }
    FVector EndTrace = StartTrace;
    EndTrace.Z -= TraceLength;
    FHitResult OutHit;
    if (World->LineTraceSingleByChannel(OutHit, StartTrace, EndTrace, CollisionChannel))
    {
        WithZ.Z = OutHit.ImpactPoint.Z;
    }
    else
    {
        UE_LOG(LogTemp, Warning, TEXT("FindZFloor failed for start trace at X:%f, Y:%f, Z:%f"),
               StartTrace.X, StartTrace.Y, StartTrace.Z);
    }
    return WithZ;
}

FVector UUWBTranslationComponent::TranslateUWBPosition(const FVector& UwbPosition, const FUwbGridData GridData,
                                                       float UwbMessageConversionScale)
{
    // Convert to Unreal units and apply the room's scale on each axis
    float X = UwbPosition.X * UwbMessageConversionScale * GridData.RoomScale.X;
    float Y = UwbPosition.Y * UwbMessageConversionScale * GridData.RoomScale.Y;
    float Z = UwbPosition.Z * UwbMessageConversionScale * GridData.RoomScale.Z;
    // Flip any axis whose reference anchor lies on the negative side of the origin
    if (GridData.RefX.X < GridData.Origin.X)
    {
        X = -X;
    }
    if (GridData.RefY.Y < GridData.Origin.Y)
    {
        Y = -Y;
    }
    if (GridData.RefZ.Z < GridData.Origin.Z)
    {
        Z = -Z;
    }
    // Add our values to the world position of the grid's origin to find our world position
    return FVector(GridData.Origin.X + X, GridData.Origin.Y + Y, GridData.Origin.Z + Z);
}

UUWBTranslationComponent* UUWBTranslationComponent::GetUwbTranslationComponent(AActor* Owner)
{
    return Owner ? Owner->FindComponentByClass<UUWBTranslationComponent>() : nullptr;
}
```

What makes this work is that the UWB sensor grid Actors in Unreal are placed in the virtual room at exactly the positions the physical sensors occupy in the digital twin of the room. The component above can therefore use the world-space locations of these virtual sensors as a kind of 'Rosetta Stone', translating the physical sensor readings relative to their world positions.
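Stripped of Unreal types, the translation reduces to a scale, a per-axis sign flip, and an offset. The standalone sketch below mirrors `TranslateUWBPosition` using plain structs (`Vec3` and `GridCalibration` are hypothetical stand-ins for `FVector` and `FUwbGridData`). Like the original, it assumes the UWB axes are aligned with Unreal's world axes; a room rotated relative to the world would need a full rotation rather than sign flips.

```cpp
struct Vec3 {
    double x, y, z;
};

// Stand-in for the fields TranslateUWBPosition reads from FUwbGridData.
struct GridCalibration {
    Vec3 origin;     // world position of the grid's origin anchor (Unreal cm)
    Vec3 refX;       // world position of the reference anchor along the UWB X axis
    Vec3 refY;       // world position of the reference anchor along the UWB Y axis
    Vec3 refZ;       // world position of the reference anchor along the UWB Z axis
    Vec3 roomScale;  // per-axis scale between the physical room and its digital twin
};

// Converts a UWB position in metres to Unreal world space.
Vec3 uwbToWorld(const Vec3& uwbMetres, const GridCalibration& g, double unitsPerMetre = 100.0) {
    // 1. Scale: metres to centimetres, then by the room scale
    double x = uwbMetres.x * unitsPerMetre * g.roomScale.x;
    double y = uwbMetres.y * unitsPerMetre * g.roomScale.y;
    double z = uwbMetres.z * unitsPerMetre * g.roomScale.z;
    // 2. Orientation: a reference anchor on the negative side of the origin
    //    means that UWB axis runs opposite to Unreal's, so flip it
    if (g.refX.x < g.origin.x) x = -x;
    if (g.refY.y < g.origin.y) y = -y;
    if (g.refZ.z < g.origin.z) z = -z;
    // 3. Offset from the origin anchor's world position
    return Vec3{g.origin.x + x, g.origin.y + y, g.origin.z + z};
}
```

As a worked example, with the origin anchor at (500, 200, 0) cm and the Y reference anchor placed at a lower world Y than the origin, a UWB reading of (1.5, 2.0, 0.5) m scales to (150, 200, 50) cm, flips Y to -200, and lands at (650, 0, 50) in world space.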

Room is Sad

Room is Sad appeared at the 2023 London Design Biennale, created in association with Charisma AI and King's Culture. The exhibition was designed by Alphabetical Design. It was seen by over a thousand people at the Biennale and during its remount at Bush House, King's College London.

For a full discussion of the project and its background, see a post I wrote on the King's Digital Lab blog about its development.

Room is Sad is an immersive experience that uses AI and the Internet of Things (IoT) to tell the story of a smart room that isn’t feeling quite itself. It's feuding with the desk upon which its laptop brain sits, and it's worried that its best friend (the plastic plant on the shelf) isn't talking to it. The participant wanders into this tangle of inanimate object angst. They can be an impartial observer, a peacemaker, or deliberately cause trouble. It’s up to them.

Room is Sad was designed as a humorous, accessible way to engage both an academic and general audience on questions of identity, privacy, and intelligence.

The Technology

- Charisma AI. A branching storytelling engine that uses Natural Language Processing (NLP) to understand users and serve responses. This is how the Room talks and understands players.

- Unreal Engine 5. Serves as the director of the experience, tying everything together with a beautifully terrible interface straight from 1994. It handles the UI and passes messages to Charisma AI through its SDK, and to devices in the room via an MQTT plugin.

- Internet of Things (IoT). Smart bulbs run by the smart home platform Home Assistant, and UWB radio sensors that give me a precise location for the player and some props (the plant). This gives the room a physical sense of its surroundings, so it can react to the participant's movements and those of objects in the room.

- AI Guest Stars. First, I used Stable Diffusion to visually represent the Room's mood and subconscious (below), changing based on its interactions with the audience. Second, OpenAI's ChatGPT (GPT-4) served as its therapist, but I didn't use it to write any of Room itself.

Room uses the same plugin library as BridgeXR to translate UWB sensor data into Unreal, in this case so that Unreal Engine can inform the AI in Charisma about the movements of people and objects in the room. In both projects, MQTT messages from UWB and IoT devices affect Actors through an MqttActorComponent attached to each one. This component listens to delegates from the MQTTManagerComponent, which is attached to the game mode and subscribed to the topics.
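The manager/component split is an observer pattern: one object owns the broker connection and broadcasts every message, and each Actor's component filters for its own topics. A plain C++ sketch of that shape, using `std::function` in place of Unreal's dynamic delegates, might look like this (`MqttManager`, `ActorMqttListener` and `MqttMessage` are hypothetical stand-ins). Unlike `AddDynamic`, a captured `this` pointer isn't cleaned up automatically, so the sketch assumes each listener stays alive for as long as the manager may dispatch to it.

```cpp
#include <functional>
#include <string>
#include <utility>
#include <vector>

struct MqttMessage {
    std::string topic;
    std::string payload;
};

// Stand-in for MQTTManagerComponent: one broker connection, many listeners.
class MqttManager {
public:
    using Listener = std::function<void(const MqttMessage&)>;
    void addListener(Listener listener) { listeners_.push_back(std::move(listener)); }
    // Called by the MQTT client whenever a message arrives on any subscribed topic
    void dispatch(const MqttMessage& message) const {
        for (const auto& listener : listeners_) listener(message);
    }
private:
    std::vector<Listener> listeners_;
};

// Stand-in for MqttActorComponent: reacts only to the topics its Actor cares about.
class ActorMqttListener {
public:
    ActorMqttListener(MqttManager& manager, std::vector<std::string> topics)
        : topics_(std::move(topics)) {
        manager.addListener([this](const MqttMessage& m) { onMessage(m); });
    }
    ActorMqttListener(const ActorMqttListener&) = delete;             // `this` is captured above,
    ActorMqttListener& operator=(const ActorMqttListener&) = delete;  // so forbid copies
    int received() const { return received_; }
    const std::string& lastPayload() const { return lastPayload_; }
private:
    void onMessage(const MqttMessage& m) {
        for (const auto& topic : topics_) {
            if (topic == m.topic) {
                ++received_;
                lastPayload_ = m.payload;
                return;  // handle each message once
            }
        }
    }
    std::vector<std::string> topics_;
    int received_ = 0;
    std::string lastPayload_;
};
```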

```cpp
#include "MqttActorComponent.h"
#include "Serialization/JsonSerializer.h"
#include "Serialization/JsonReader.h"
#include "BridgeARGameMode.h"
#include "Kismet/GameplayStatics.h"
#include "MQTTManagerComponent.h"

UMqttActorComponent::UMqttActorComponent()
{
    DeviceName = TEXT("Mqtt1");
}

// Called when the game starts
void UMqttActorComponent::BeginPlay()
{
    Super::BeginPlay();
}

void UMqttActorComponent::InitializeComponent()
{
    Super::InitializeComponent();
}

// The MQTT manager lives on the game mode, so all Actors share one broker connection
UMQTTManagerComponent* UMqttActorComponent::GetMqttManager()
{
    AGameModeBase* GameMode = UGameplayStatics::GetGameMode(GetWorld());
    if (GameMode)
    {
        return UMQTTManagerComponent::GetMqttManagerComponent(GameMode);
    }
    return nullptr;
}

void UMqttActorComponent::AttachToMqttManager()
{
    // Bind to the manager's connection and message delegates
    if (UMQTTManagerComponent* ManagerComponent = GetMqttManager())
    {
        ManagerComponent->OnConnect.AddDynamic(this, &UMqttActorComponent::OnConnect);
        ManagerComponent->OnMessageReceived.AddDynamic(this, &UMqttActorComponent::OnMqttMessageReceived);
    }
}

void UMqttActorComponent::SubscribeToTopics()
{
    if (UMQTTManagerComponent* ManagerComponent = GetMqttManager())
    {
        for (const FMqttSubscriptionTopic& Topic : TopicsToSubscribe)
        {
            ManagerComponent->SubscribeToTopic(Topic);
        }
    }
}

void UMqttActorComponent::OnConnect_Implementation()
{
    // Subscribe once the broker connection is established
    SubscribeToTopics();
}

void UMqttActorComponent::OnMqttMessageReceived_Implementation(FBridgeMqttMessage Message)
{
    // Check whether this component is watching the message's topic
    for (const FMqttSubscriptionTopic& Topic : TopicsToSubscribe)
    {
        if (Topic.Topic.Equals(Message.Topic))
        {
            // If so, parse the JSON payload and pass it on to the owning Actor
            FMqttJSONMessage SubscribedMessage;
            SubscribedMessage.Json = MessageToMap(Message.Message);
            SubscribedMessage.Topic = Message.Topic;
            SubscribedMessage.MqttMessage = Message;
            OnReceiveSubscribedMessage.Broadcast(SubscribedMessage);
            break; // broadcast once, even if the topic is listed twice
        }
    }
}

/**
 * @brief Publish an IoT message through the game mode's MQTT manager
 * @param Message topic and payload to publish
 */
void UMqttActorComponent::PublishMqttMessage(FBridgeMqttMessage Message)
{
    if (UMQTTManagerComponent* ManagerComponent = GetMqttManager())
    {
        ManagerComponent->PublishMessage(Message);
    }
}

// Flattens a JSON object payload into field name -> string value pairs
TMap<FString, FString> UMqttActorComponent::MessageToMap(FString MqttMessage)
{
    TMap<FString, FString> MessageMap;
    if (MqttMessage.IsEmpty()) { return MessageMap; }
    TSharedPtr<FJsonObject> JsonObject;
    TSharedRef<TJsonReader<>> Reader = TJsonReaderFactory<>::Create(MqttMessage);
    if (FJsonSerializer::Deserialize(Reader, JsonObject) && JsonObject.IsValid())
    {
        for (const auto& Element : JsonObject->Values)
        {
            // TryGetString converts numbers and booleans too, and quietly skips
            // values with no string form (nested objects, arrays)
            FString JsonValue;
            if (Element.Value.IsValid() && Element.Value->TryGetString(JsonValue))
            {
                MessageMap.Add(Element.Key, JsonValue);
            }
        }
    }
    else
    {
        UE_LOG(LogTemp, Warning, TEXT("JSON Parse Failed!"));
    }
    return MessageMap;
}
```
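`OnMqttMessageReceived` matches topics with exact string equality, which works as long as each component lists concrete topics. If a component ever subscribed with MQTT wildcards (for example `uwb/+/position`), incoming topics would never equal the filter string. Whether these projects use wildcards isn't stated; as a hedged sketch, filter matching following the MQTT specification's rules (`+` matches exactly one level, a trailing `#` matches the parent level and everything below it, and wildcards at the first level don't match topics starting with `$`) could look like this. The function names are hypothetical.

```cpp
#include <cstddef>
#include <string>
#include <vector>

// Splits "a/b/c" into {"a", "b", "c"}; empty levels are kept ("a//b" has three).
static std::vector<std::string> splitLevels(const std::string& s) {
    std::vector<std::string> levels;
    std::size_t start = 0;
    while (true) {
        const std::size_t slash = s.find('/', start);
        levels.push_back(s.substr(start, slash == std::string::npos ? std::string::npos : slash - start));
        if (slash == std::string::npos) break;
        start = slash + 1;
    }
    return levels;
}

// Returns true if `topic` matches subscription `filter` under MQTT wildcard rules.
bool topicMatches(const std::string& filter, const std::string& topic) {
    // Wildcards at the first level must not match system topics such as "$SYS/..."
    if (!topic.empty() && topic[0] == '$' && !filter.empty() &&
        (filter[0] == '+' || filter[0] == '#')) {
        return false;
    }
    const std::vector<std::string> f = splitLevels(filter);
    const std::vector<std::string> t = splitLevels(topic);
    std::size_t i = 0;
    for (; i < f.size(); ++i) {
        if (f[i] == "#") return true;      // this level and everything below, incl. the parent
        if (i >= t.size()) return false;   // filter has more levels than the topic
        if (f[i] != "+" && f[i] != t[i]) return false;
    }
    return i == t.size();                  // every topic level must be consumed
}
```

Swapping `Topic.Topic.Equals(Message.Topic)` for a check like this would let one component follow, say, every tag's position in a room through a single subscription.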

The result is that the Room AI can be informed by Unreal (via MQTT) when things change in the room, affecting its mood accordingly.

In the clip below, the Room asks the human to bring the plastic plant (its best friend) closer to it, and registers when the person has done so. (You can see the mood portrait change.)
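How the Room decides that the plant is "closer" isn't described. One common approach, sketched here as an assumption rather than the project's actual logic, is a distance threshold with hysteresis: the event fires once when the plant comes within one radius, and doesn't re-arm until the plant moves beyond a larger one, so UWB jitter around a single threshold can't set the Room off repeatedly. The `ProximityTrigger` class and the 80 cm / 120 cm values are illustrative.

```cpp
#include <cmath>

struct Point2 {
    double x, y;  // floor-plane position in world units (cm)
};

// Fires once when a tracked object comes within `enterCm` of a target, and only
// re-arms after it has moved beyond `exitCm` (which should be larger).
class ProximityTrigger {
public:
    ProximityTrigger(double enterCm, double exitCm) : enter_(enterCm), exit_(exitCm) {}

    // Returns true only on the update where the object first comes close.
    bool update(const Point2& object, const Point2& target) {
        const double d = std::hypot(object.x - target.x, object.y - target.y);
        if (!near_ && d <= enter_) {
            near_ = true;
            return true;
        }
        if (near_ && d >= exit_) {
            near_ = false;  // moved well away: allow the event to fire again
        }
        return false;
    }

    bool isNear() const { return near_; }

private:
    double enter_;
    double exit_;
    bool near_ = false;
};
```

Used with `ProximityTrigger trigger(80.0, 120.0)`, each new plant position would be fed to `update`, and a `true` result would be the moment to tell the Room (and Charisma) that its friend has arrived.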

GLoW: Illuminating Innovation

Creative Technologist

A multi-sited London exhibition showcasing groundbreaking artworks by leading women artists working with cutting-edge technologies. From early AI explorations in the 1980s to Virtual Reality (VR) in the 1990s, virtual world creation in the 2000s, and portable VR in the 2010s, GLoW: Illuminating Innovation chronicled the often-overlooked impact of women on the evolution of technology.

I was a supporting technologist for this project, working with four artists who were commissioned to create new work. My work (with colleague Neil Jakeman at King's Digital Lab) focused on giving advice on immersive XR technologies, from real-time engines like Unreal and Unity to WebXR frameworks, as well as scripting in Python. The real challenge was evaluating these technologies from a creative perspective, in conversation with the artists about their work as it evolved, to find the right mix of tools: effective, sustainable, and able to live up to their creative vision.

More on the artists and the project can be found on the GLoW website.

Stem Cell City (beta)

Most of my development work on GLoW was for Yarli Allison's Stem Cell City. Yarli's work uses video and 2D art (along with some VR experiments), as well as physical sculpture.

Yarli didn't have much experience with the technologies available in GLoW, so the initial stages of our collaboration were a series of technical experiments, beginning in Nottingham University's Virtual and Immersive Production Studio, that gave her a chance to play with various immersive technologies and better understand which would best fit her artistic vision. I worked with Yarli during these experiments to find a combination of technologies that would fulfill our criteria:

- Be accessible to Yarli (technically) so they could use them in their practice and understand how to get the most out of the technologies with our support.

- Be sustainable in the longer term, so Yarli could use and remix what was made without our help after the project ended.

- Fit the format of the GLOW exhibition, in terms of space and hardware available.

- Be accessible to our expected audience, casual foot traffic on the Strand in London.

Our audience was expected to be broad and casual: the exhibition wanted to appeal to people just passing by on the street as much as to those who came explicitly to see the works. Asking the audience to download an app or go through a long onboarding experience would have put up a significant barrier to engagement.

Although we did some experiments in Unity, we settled quickly on WebXR. Because it runs in the browser, there would be no downloads, and it would be compatible with almost any modern smart device. Yarli gave me a series of 2D assets she had been building, as well as some 3D models she'd made, which my colleague Neil Jakeman processed to make them more web-friendly. From these I built a virtual experience according to her designs, a multi-room clinic that was part of a feminist utopia, using A-Frame and Three.js.

The assets made heavy use of animated GIFs for TV displays, characters, and interactions. GIFs don't work well inside A-Frame, so as part of the development I wrote several custom A-Frame components, including the one below, which takes the individual frames of a GIF (saved as numbered image files) and applies them to an object as textures in sequence, like a flipbook.

```javascript
/**
 * Load a series of images as the texture of the entity.
 * Files are loaded on init using these properties:
 *   urlPrefix + index + "." + fileType
 * to make a URL like /assets/monitor_1.jpg
 */
AFRAME.registerComponent('texture-flipbook', {
  multiple: true,

  schema: {
    urlPrefix: {type: 'string', default: '/stemcellclinic/public/assets/stemcellcity/bedroom/monitor_0'},
    fileType: {type: 'string', default: 'png'}, // used as filename suffix, so make sure it matches your files
    startFrame: {type: 'int', default: 1},
    endFrame: {type: 'int', default: 1}, // inclusive
    speed: {type: 'int', default: 500}, // milliseconds between frames
    repeat: {type: 'boolean', default: true},
    startOnLoad: {type: 'boolean', default: false}
  },

  init: function () {
    this.imageArray = [];
    this.index = 0;
    this.playing = false;
    this.flipbookInterval = null;
    this.loadImages();
  },

  /** Loads the sequence from startFrame to endFrame; frames that fail to load are skipped with a warning */
  loadImages: function () {
    const loader = new THREE.TextureLoader();
    this.imageArray = [];
    for (let x = this.data.startFrame; x <= this.data.endFrame; x++) {
      const imageURL = this.data.urlPrefix + x + "." + this.data.fileType;
      // load() returns a Texture immediately and fills it in asynchronously,
      // so a missing file can only be detected in the error callback
      const texture = loader.load(imageURL, undefined, undefined, () => {
        console.warn(imageURL + " not found for texture flipbook! Skipping frame");
        this.imageArray = this.imageArray.filter(t => t !== texture);
      });
      this.imageArray.push(texture);
    }
  },

  play: function () {
    const mesh = this.el.getObject3D("mesh");
    if (!mesh) {
      return; // no mesh yet, nothing to texture
    }
    // Store the original material and map once, so we can revert to the
    // starting state even if play() is called again after a pause
    if (!this.material) {
      this.material = mesh.material;
      this.defaultMap = mesh.material.map;
    }
    if (this.data.startOnLoad) {
      this.playFlipbook();
    }
  },

  revert: function () {
    this.material.map = this.defaultMap;
    this.material.needsUpdate = true;
  },

  playFlipbook: function () {
    // Clear any running timer so repeated calls don't stack intervals
    clearInterval(this.flipbookInterval);
    this.playing = true;
    this.flipbookInterval = setInterval(() => {
      if (this.playing) {
        this.nextFrame();
      }
    }, this.data.speed);
  },

  nextFrame: function () {
    if (this.index >= this.imageArray.length) {
      this.index = 0;
      this.finishFlipbook();
      return;
    }
    this.material.map = this.imageArray[this.index++];
    this.material.needsUpdate = true;
  },

  finishFlipbook: function () {
    if (!this.data.repeat) {
      this.stopFlipbook();
    }
    this.el.emit('onFlipbookFinished', {
      id: this.id,
      repeat: this.data.repeat,
      endFrame: this.data.endFrame
    }, false);
  },

  /** Stop animation but leave the frame index where it is */
  pauseFlipbook: function () {
    clearInterval(this.flipbookInterval);
    this.playing = false;
  },

  /** Stop animation and reset the index */
  stopFlipbook: function () {
    clearInterval(this.flipbookInterval);
    this.index = 0;
    this.playing = false;
  },

  /** Clean up the timer when the component is removed from the entity */
  remove: function () {
    clearInterval(this.flipbookInterval);
  }
});
```
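
The frame-stepping rules of the texture-flipbook component are easy to lose among its timers and textures. The sketch below is a hypothetical, framework-free model of them (the names `buildFrameUrls` and `FlipbookSequencer` are illustrative and do not come from the project repository). It mirrors the component's `urlPrefix + index + "." + fileType` naming and its end-of-pass behaviour: the index wraps to zero, a non-repeating flipbook stops, and the pass boundary is where `onFlipbookFinished` would be emitted.

```javascript
// Hypothetical model (not project code) of the flipbook's URL naming.
// Builds the URL for every frame from startFrame to endFrame, inclusive.
function buildFrameUrls(urlPrefix, startFrame, endFrame, fileType) {
  const urls = [];
  for (let i = startFrame; i <= endFrame; i++) {
    urls.push(urlPrefix + i + "." + fileType);
  }
  return urls;
}

// Hypothetical model of the frame stepping, for a flipbook that is already playing.
class FlipbookSequencer {
  constructor(frameCount, repeat) {
    this.frameCount = frameCount;
    this.repeat = repeat;
    this.index = 0;
    this.playing = true;
  }

  // Returns the frame index to display on this tick, or null on a tick
  // that only marks the end of a pass (or when playback has stopped).
  next() {
    if (!this.playing) {
      return null;
    }
    if (this.index >= this.frameCount) {
      this.index = 0;
      if (!this.repeat) {
        this.playing = false; // non-repeating flipbooks stop after one pass
      }
      return null; // where the component emits 'onFlipbookFinished'
    }
    return this.index++;
  }
}
```

Keeping this logic separate from A-Frame would allow it to be unit tested in Node, leaving the component responsible only for loading textures and running the timer.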

Other components I made, including interaction components for posters, radios, and even a sleeping patient (to play a cell division minigame), are in the project's repository.

Before/After

Writer, Technical Artist

This short film was the final assignment of the Virtual Production certificate course I took at the National Film and Television School. We built an environment to be used as a backdrop on the NFTS volume and then filmed a very short (2-page) script with actors. It gave us a chance not only to do the technical work but also to gain real experience of working on a VP set under heavy time pressure: we had just under four hours to set up, rehearse, and film everything.

I collaborated with some brilliant fellow students with a range of skills: director, cameraman, VFX artist, and designer. We created the concept together, and then I wrote the screenplay. Telling a story in essentially one location in two minutes was a real challenge, and I also wanted to leverage the creative potential of a real-time background by writing a story that allowed it to change radically. I also helped to create the environment, making sure it was stress-tested and efficient so we didn't have any nasty surprises.

As part of the course I also created a short previs film in Unreal, based on The Invasion, a screenplay I co-wrote with Alex Walker.